How many times have you started to prep your asynchronous online courses and felt stuck on how to make them more engaging? You may ask, what do we do then? How can we ensure that students, along with their instructor, are participating meaningfully, interacting, and learning from each other?

Background: Online Courses and Substantive Interaction

To give a little context, in 2021 the Department of Education revised several regulations specific to distance education courses, giving much-needed clarity on “regular and substantive interaction.” The revisions provide more guidance and explicit instances of what constitutes regular and substantive interaction.

For example, instructor-led discussion forums are added consistently throughout the course to facilitate engagement among students and between the instructor and students. Another example is the extent to which the instructor posts and engages with students in the discussion forums. Instructor participation should be consistent and frequent. It can take any number of forms, such as encouraging students, providing alternative perspectives, or posing questions.

Requirements and Best Practices

Likewise, most online programs benefit from some kind of certification program that promotes best practices. For example, Quality Matters (QM) is a nationally recognized program that uses research-based best practices and a peer-review process to certify the quality of online courses and their components. The newest version of the Quality Matters rubrics requires varied interaction, including learner-learner, learner-instructor, and learner-content interactions.

Now that we know interactions are needed, how can we revamp discussion board forums to intentionally develop opportunities for students to engage with each other, with the content, and with the instructor?

I have connected most of these activities to established learning theories to further align my instructional design. Siemens (2004) developed Connectivism, which seeks to define how learning occurs in the digital age, with explicit use of technology that supports and influences new learning. Likewise, Vygotsky’s Theory of Cognitive Development aligns with many of these activities because of the increased social interaction.

Let’s check out some alternative ways to encourage deeper, more meaningful and participatory discussion board forums.

1. Virtual Stations

Virtual stations are a strategy in which students are put into small groups to visit a ‘station,’ an area with different materials, texts, or activities to interact with or complete. Groupings can be collaborative or cooperative. Widely available collaborative apps for this include Wakelet, Canva Whiteboard, Figma, and Microsoft Whiteboard.

Figure 1: Virtual Stations on Figma

I have used virtual stations for both individual work and collaborative groups. Students complete the stations prior to posting in the discussion thread. For instance, they might brainstorm or sort ideas and then develop their response.

Virtual stations are quite easy to deploy, and if a particular module has multiple topics to cover, stations can address all of them while providing choice to your students.

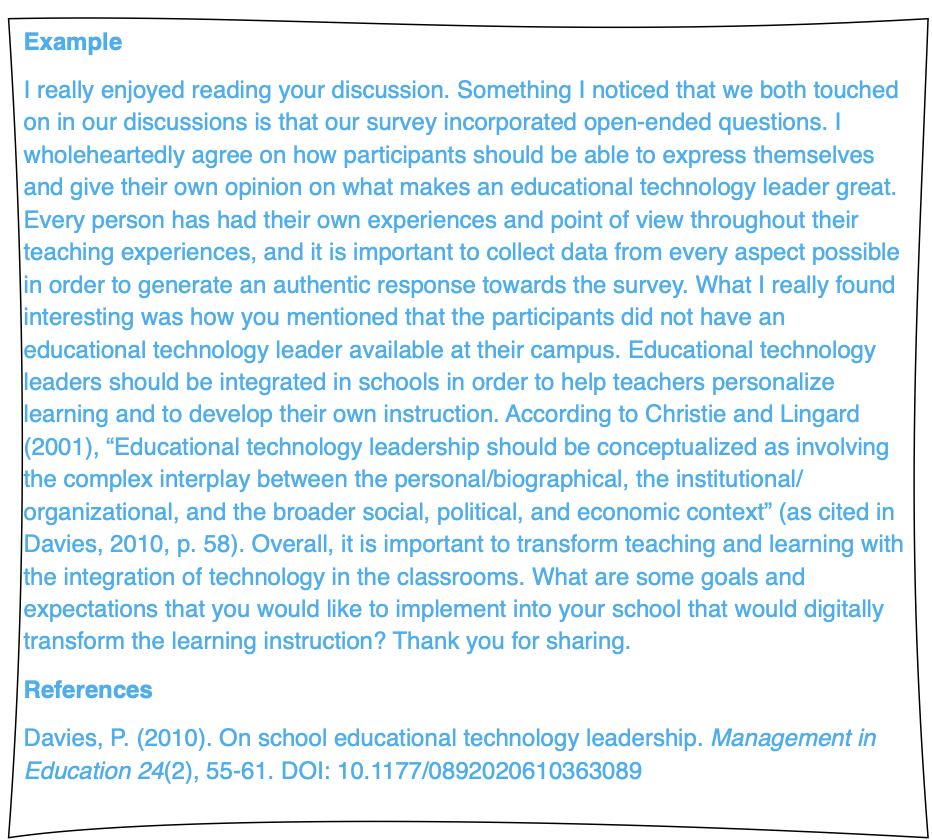

2. Discussion Board Response: 3C + Q by Jenn Stewart-Mitchell

Big, huge shout out to Jenn Stewart-Mitchell, who developed this quick, easy strategy for more concise direction and more beneficial discussions and feedback. The 3C+Q is effective because it gives much more direction than just saying “reply to 2 peers.”

- Compliment – Compliment shared ideas or something you liked or found interesting. Be specific in stating what you liked, found interesting, or learned. This is something you read that had a positive impact on you.

- Comment – After reading the post, you comment on something that you found “relevant or meaningful” (Stewart-Mitchell, n.d., “Comment”). With your comment, you can be more specific about your level of agreement with the post as a whole or with a specific portion. An example would be to state, “I agree with…” or “I do not agree” or a similar phrase.

- Connect – Using the comprehension skill of making connections, connect with a specific portion of the post. For example, the making connections comprehension skill emphasizes 1) text to text, 2) text to self, and 3) text to the world. Some examples that Stewart-Mitchell provides are 1) I can connect with you about … 2) I once read a story about … 3) I had the same thing happen to me…

- Question – The question portion of your response circles back to continue the dialogue with your peer. You would ask a question about the post as a whole or about a portion of the post. It can be a follow-up question that asks for more detail about a process or event, requests more information about something, or asks about your peer's opinions or experience.

Figure 2: 3C+Q Student Example

An alternative is the T-A-G Feedback Protocol (author unknown). T-A-G is short for Tell-Ask-Give and, just like the 3C+Q method, provides better direction on how to respond to others in the discussion. I, too, follow these patterns when they are added to the discussion instructions. T represents tell: the student makes 2 positive statements identifying strengths. A is for ask: the student provides two to three follow-up questions that address confusing areas. The last letter, G, is for give: students give four suggestions to tweak, revise, or improve the initial response.

I really enjoy this particular strategy for discussion replies because it gives specific direction to students. It goes beyond agreeing and sets the expectation that students will really dive into the conversation. If you have any particular feedback strategies such as this one, share them with us in the comments.

3. Synchronous Meetups

This is rather simple in theory, but allowing students to meet and talk through a discussion board prompt before posting is a great way to increase their engagement. Any virtual conferencing app will work for dynamic, synchronous student discussions. Students join a group and schedule a time to meet on their own. Afterward, they post their thoughts. Alternatively, you can embed a synchronous meetup in lieu of the written discussion post.

I have implemented both types. In the course meetup, I have scheduled one towards the end of the course to discuss the topic of the week, recap a few items from previous weeks, and look at the application of the course content for their future.

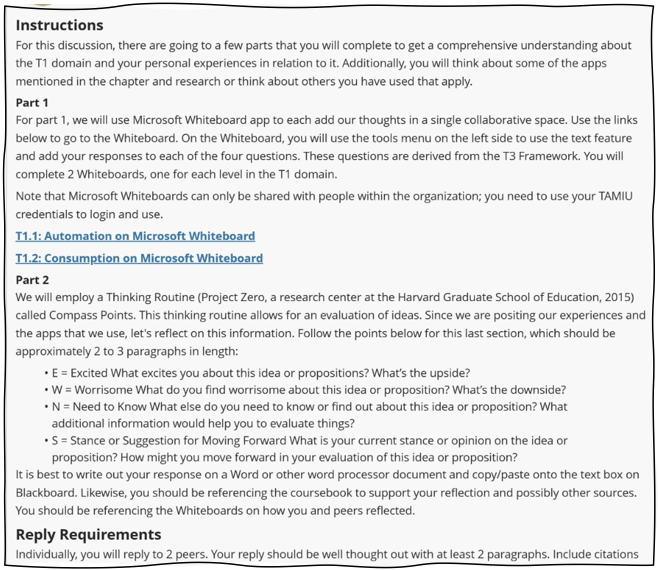

4. Project Zero’s Thinking Routines

I will always share my love for the Thinking Routines from Project Zero at the Harvard Graduate School of Education. These routines are evidence-based, effective, and easy to implement in various activities. The thinking routines give explicit instructions about thinking in certain ways or certain contexts. Online, I may use them as part of the initial prompt, during students' engagement and group discussions, or as the format for their replies to others. There are prompts for all thinking situations.

Examples

- The 4 C’s. This thinking routine structures discussion around a specific text or topic.

  - Connections: What connections do you draw between the text and your own life or your other learning?

  - Challenge: What ideas, positions, or assumptions do you want to challenge or argue with in the text?

  - Concepts: What key concepts or ideas do you think are important and worth holding on to from the text?

  - Changes: What changes in attitudes, thinking, or action are suggested by the text, either for you or others?

- Think, Puzzle, Explore. This routine asks questions that set the stage for ‘deeper inquiry.’

  - What do you think you know about this topic?

  - What questions or puzzles do you have about this topic?

  - How might you explore your puzzles about this topic?

Figure 3: Thinking Routine: Compass Points

Thinking Routines are incredibly easy to implement. Try one the next time you are developing or revising your discussion thread.

5. Buzz Groups

Buzz Groups are a cooperative learning strategy where temporary, small groups form to discuss a topic. The task is to reach a solution or consensus together. This is a cooperative (and differentiated) learning strategy because there are some roles involved: scribe, timekeeper, and spokesperson.

I used this for a specific week that had quite a varied topic list. I wanted students to engage with each other before posting to increase engagement. Moreover, it could give additional context to students who are unfamiliar with the topic or have no experience with it. You will notice that, in this instance, I provided topics with opposing or polarizing views.

In the discussion forum instructions, students first needed to form groups by joining a buzz group of their choice based on the topic. Then, the group scheduled a time to meet and discuss the topic.

Examples:

- Buzz Group 1: BYOD: BY-Oh-Yes or B-Y-E-no?

- Buzz Group 2: Online safety and security: Monitor and protect or block it all?

- Buzz Group 3: Broadband: All for one and one for all? Access in and out of school (home).

- Buzz Group 4: Makerspaces: Magic spaces or wasted spaces?

- Buzz Group 5: Should it be Acceptable or Responsible Use Policies?

After the students have met synchronously, they reflect on their discussion and post their initial response. I have also directed them to develop the initial response together. Buzz groups can give students excitement and motivation to discuss a topic they are interested in.

I employed buzz groups in two different courses. In both, students were much more engaged in sharing their ideas and in the group discussions. Many seemed to have a positive outlook as they found similar experiences with other students.

6. Professional/Expert Interviews

Every industry is filled with experienced individuals who can provide additional insights. Students expand their network and gain a new perspective from someone who is directly involved with it.

Match up your students with experts in the field for brief interviews. The interviews are recorded, either by audio or video, and posted to the discussion board with a brief reflection. In my course, there was so much insight gleaned because the students developed the questions themselves, based on the topic provided and aligned with what was covered for the week.

For my example, the students are enrolled in a Master’s program and the course is about ed tech leadership. They interviewed current CTOs or other directors or coordinators whose focus is on education technology.

Reflecting back, there was plenty of engagement and many a-ha moments as students learned more about the role and its responsibilities from the interviews than they did from just the course book.

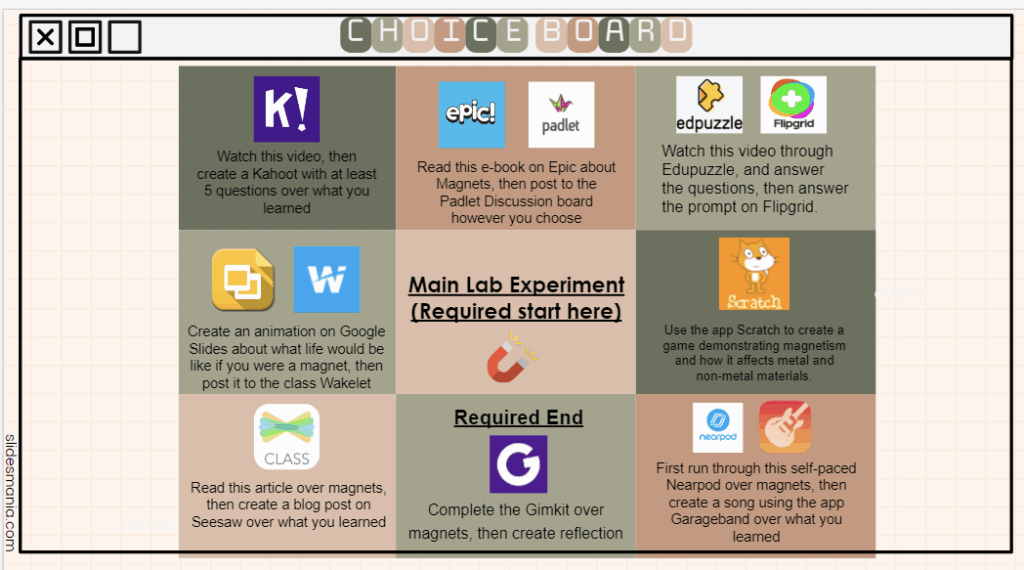

7. Create and Post Ed Tech Artifacts

This may be a no-brainer, but instead of having students create different artifacts and only submit them formally to you, change it up and have them post their creations to the discussion board. This allows students who are unsure of or not confident in their creativity to get ideas and also learn new information in a different format.

In my courses, I have had my graduate students create technology-driven projects such as infographics, multimedia presentations, choice boards, videos, podcasts, and websites.

Figure 4: Student-created choice board

I enjoyed the variety in their projects, as well as the encouragement they offered about their peers’ work and creativity. It was so much more enjoyable that they were sharing with others instead of just me.

But wait, there’s more…

Before signing off, I want to provide one last tip to increase your engagement and revamp your discussion board. Try combining any of the strategies mentioned above, or add elements of your own! This is “strategy smashing,” where you combine and infuse multiple strategies for successful learning experiences.

For example, you may post an assignment on a discussion board that instructs students to break into buzz groups. The buzz groups will also apply a Thinking Routine with some pre-determined questions. They meet synchronously, chat and answer the Thinking Routines questions together. Then, they each reflect on the conversation, the topic, and their experiences before posting an initial reply. To reply to others, they then use the 3C+Q reply. Don’t be afraid to try new strategies you have researched. And definitely don’t be afraid to combine these strategies!

Embracing these seven strategies can alter your online courses from static and routine discussions into dynamic learning environments where both instructors and students thrive. By fostering genuine interaction and active participation, you’ll not only enhance engagement but also cultivate a richer, more effective educational experience for your students. Doing something different in your course also leads to more innovation. So, go ahead and infuse these fresh ideas, and watch your students flourish!

Let us know your thoughts in the comments! I would love to hear from you.

Sosa, C. (2025, November 11). Seven ways to spruce up your collegiate-level online courses. TCEA TechNotes.