Mr. Rodriguez, a high school English teacher, used an AI writing assistant to give feedback on his students’ personal essays. Excited about the tool’s potential, he uploaded the essays without removing names or personal details. Months later, he was shocked to learn that the AI company had used the essays to train its model. When other users prompted the AI with similar topics, it occasionally generated responses that included his students’ personal experiences, family stories, and even sensitive details about their struggles and dreams. What had seemed like a harmless use of technology exposed his students’ private lives, highlighting the critical need to anonymize student work before using AI tools.

While parts of this story are exaggerated for illustration, the concerns are real. Many AI systems can learn from the data users submit, and non-anonymized student work could shape the AI’s responses in subtle ways. Even if specific personal stories are never repeated word for word, students’ writing styles, ideas, or themes might still influence what the model produces. This raises important questions about student privacy, data ownership, and ethical AI use in education. Teachers and schools must protect student information both from immediate breaches and from the long-term effects of AI systems learning from student data. Anonymizing student work before using AI tools is a key step in protecting student privacy and ensuring responsible technology use in classrooms.

Protect Student Safety with TCEA PROTECT

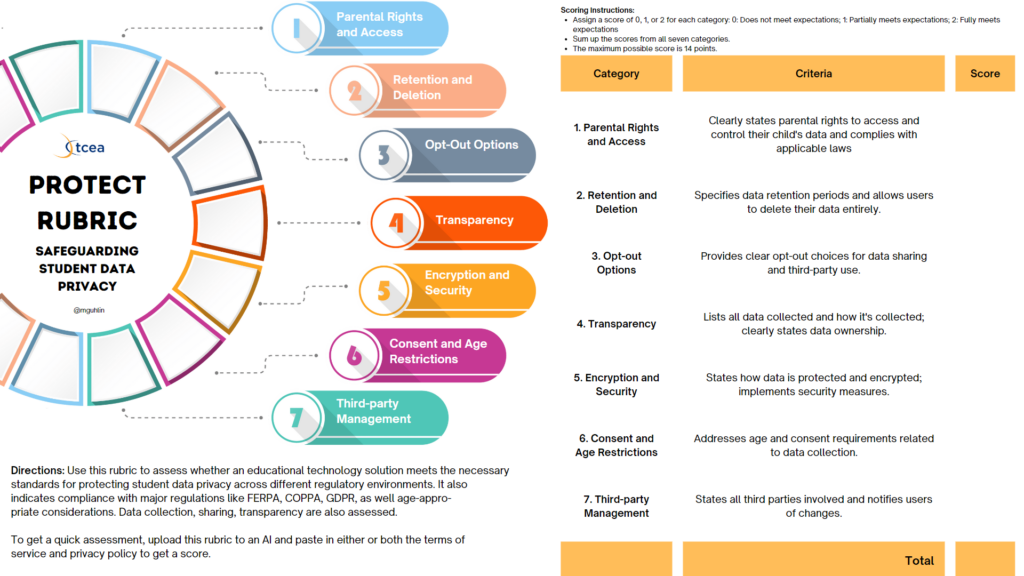

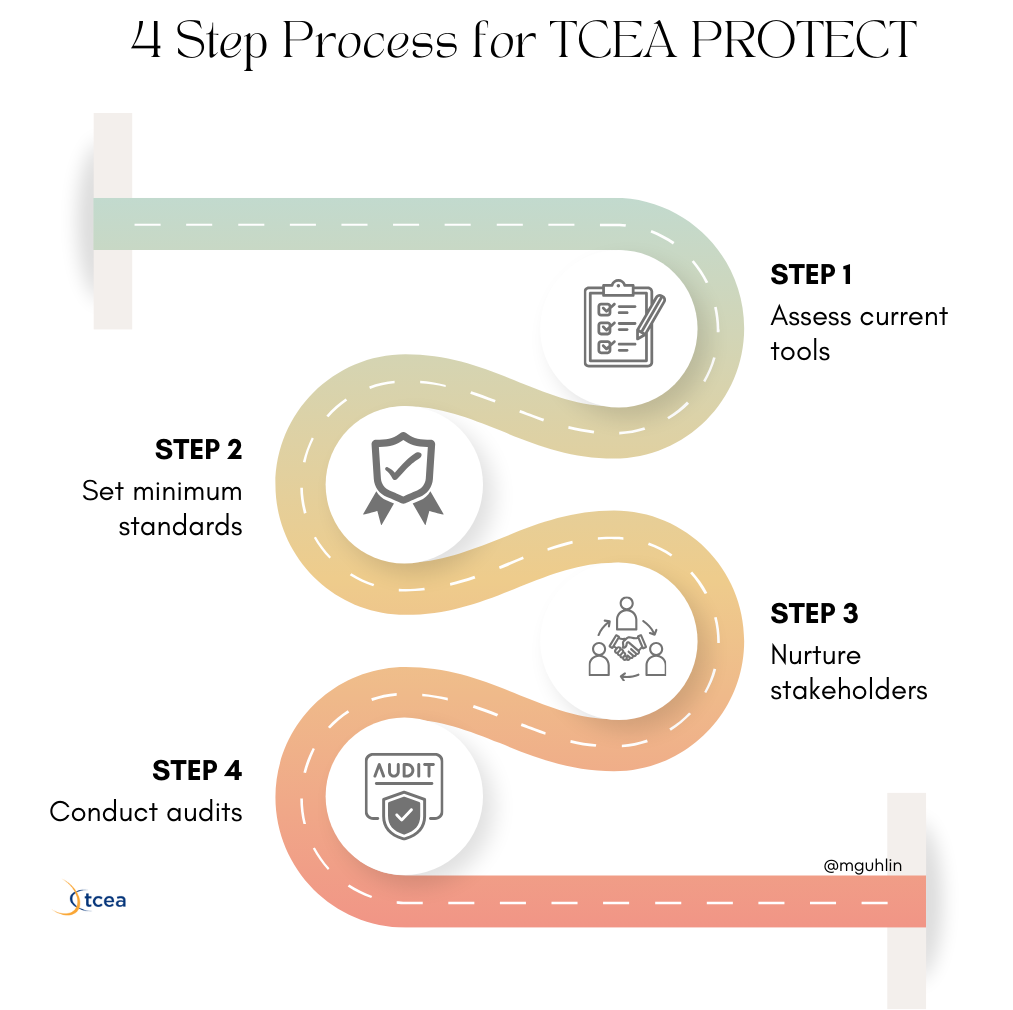

Before using any AI tool with student data, it’s important to know how that tool handles the information you provide. Our TCEA PROTECT model can help: it offers a practical framework for evaluating and implementing safe digital tools.

Ideally, you should be able to turn off the setting that lets the provider use your inputs to train or improve its model. However, not all tools offer this option. Some AI providers clearly state on their websites that they don’t use user inputs for training, while others may collect and use this data by default. Always verify a tool’s data practices before sharing sensitive information with it. If you can’t turn off this setting or aren’t sure about the tool’s data policies, anonymize student work before you submit it.

Best Practices to Protect Student Safety When Using AI

Consider these methods to anonymize student data before inputting it into AI tools:

Assign Unique Identifiers

Set up a system in which each student is assigned a random number or code at the start of the term. This code serves as the student’s identifier for all AI-processed work. For example, “Jane Doe” becomes “Student_A7X9.” Keep a secure list that links these codes to student names, accessible only to authorized staff. When submitting work to AI tools, use only these identifiers. This protects student identities and reduces the risk that the AI’s feedback is influenced by a student’s name or by the gender or ethnicity a name might suggest.
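If you are comfortable with a little scripting, generating the codes can be automated. The following is a minimal Python sketch, not part of the TCEA PROTECT model: `assign_identifiers` is a hypothetical helper, and the `Student_` prefix and four-character codes simply mirror the “Student_A7X9” example. It uses Python’s `secrets` module so codes can’t be predicted from one another, and it rejects duplicate names so two students never silently share one entry.

```python
import secrets
import string

# Codes use uppercase letters and digits, like the "A7X9" in "Student_A7X9".
ALPHABET = string.ascii_uppercase + string.digits


def assign_identifiers(names, code_length=4, prefix="Student_"):
    """Hypothetical helper: map each student name to a unique random code."""
    if len(set(names)) != len(names):
        # Two identical names would collapse into one entry; disambiguate first.
        raise ValueError("duplicate names in roster")
    roster = {}
    used_codes = set()
    for name in names:
        while True:
            # secrets draws from a cryptographically secure random source.
            code = prefix + "".join(secrets.choice(ALPHABET) for _ in range(code_length))
            if code not in used_codes:  # redraw on the rare collision
                used_codes.add(code)
                roster[name] = code
                break
    return roster


if __name__ == "__main__":
    for name, code in assign_identifiers(["Jane Doe", "John Smith"]).items():
        print(name, "->", code)
```

The returned dictionary is the linking list described above, so it belongs in secure storage that only authorized staff can access, never in anything sent to an AI tool.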

Remove Personal Details

Carefully review each piece of student work before submitting it to AI tools. Look for personal information, including names, addresses, mentions of siblings, and specific personal experiences that could identify a student. Use the find-and-replace function in your word processor to replace names with identifiers. For essays or reports, read through the full text to catch and remove any other identifying information. While time-consuming, this step is crucial for protecting student privacy.
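Find-and-replace can also be scripted across many documents at once. The sketch below is a hypothetical illustration, assuming a roster dictionary that maps each student’s full name to a code, like the secure list described above. It replaces full names first, then first or last names used on their own, and it skips any name part shared by two students so that one student’s code is never attached to another. Matching is case-sensitive and whole-word only, so nicknames, misspellings, shared first names, and siblings’ names still require the manual read-through.

```python
import re
from collections import Counter


def replace_names(text, roster):
    """Hypothetical helper: swap student names in `text` for their codes.

    `roster` maps each full name (e.g. "Jane Doe") to a code (e.g. "Student_A7X9").
    Codes are assumed to contain only letters, digits, and underscores.
    """
    # Count how many students share each first or last name.
    part_counts = Counter(part for name in roster for part in set(name.split()))
    variants = {}
    for full_name, code in roster.items():
        variants[full_name] = code
        for part in full_name.split():
            if part_counts[part] == 1:  # skip ambiguous parts; review them by hand
                variants.setdefault(part, code)
    # Replace longer variants first so "Jane Doe" is handled before "Jane".
    for variant in sorted(variants, key=len, reverse=True):
        pattern = r"\b" + re.escape(variant) + r"\b"  # whole words only
        text = re.sub(pattern, variants[variant], text)
    return text


if __name__ == "__main__":
    roster = {"Jane Doe": "Student_A7X9", "John Smith": "Student_K2P4"}
    print(replace_names("Jane Doe wrote about her brother. Later, Jane and John Smith met.", roster))
    # prints: Student_A7X9 wrote about her brother. Later, Student_A7X9 and Student_K2P4 met.
```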

Use Data Scrubbing Tools

Data scrubbing tools can help speed up the anonymization process. These tools automatically detect and remove personal information from documents. Tools like AI Eraser, a free Chrome extension, can quickly check your data, flagging potential personal identifiers before submission. Some tools can also handle different data formats, from spreadsheets to text documents. While helpful, these tools should be used alongside manual review to catch context-specific information that might slip through.
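To show roughly what such tools do behind the scenes, here is a deliberately simple Python sketch of pattern-based scrubbing. It is not how AI Eraser or any other specific product works; the two patterns (email addresses and U.S.-style phone numbers) and the bracketed placeholder labels are illustrative assumptions. Like commercial tools, it cannot recognize context-specific details such as a student describing a family event, which is why manual review still matters.

```python
import re

# Illustrative patterns only; real scrubbing tools use many more rules.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    # U.S.-style numbers such as 512-555-0100 or (512) 555-0100
    "PHONE": re.compile(r"\(?\b\d{3}\)?[-.\s]?\d{3}[-.\s]?\d{4}\b"),
}


def scrub(text):
    """Replace each match with a [LABEL] placeholder and list what was removed."""
    findings = []
    for label, pattern in PATTERNS.items():
        findings.extend((label, match.group()) for match in pattern.finditer(text))
        text = pattern.sub(f"[{label}]", text)
    return text, findings


if __name__ == "__main__":
    cleaned, found = scrub("Email jane.doe@example.com or call (512) 555-0100.")
    print(cleaned)  # Email [EMAIL] or call [PHONE].
    print(found)
```

Returning the list of findings alongside the cleaned text mirrors the “flag before submission” idea: you can see exactly what was removed and decide whether anything else needs attention.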

Review Before Submission

Even after using automated tools or following anonymization procedures, a final manual review is essential. Randomly select samples of the anonymized work and check them for any remaining identifying information. Look for subtle details, like unique writing styles or personal examples, that could be linked back to a specific student. This final step safeguards against accidental data exposure, and any gaps it uncovers can help you refine your anonymization process over time.
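Part of this spot check can be scripted too. This hypothetical Python sketch randomly selects anonymized documents and flags any names from a list you supply that still appear in them. The function name, the dictionary format for documents, and the optional seed (which makes the sample reproducible) are assumptions for illustration. It only catches the names you list; subtle identifiers such as personal anecdotes or a distinctive writing style still need a human reader.

```python
import random
import re


def spot_check(documents, names_to_find, sample_size, seed=None):
    """Hypothetical helper: randomly sample anonymized documents and report
    which listed names still appear in each one.

    documents: dict mapping a document's identifier (e.g. "Student_A7X9") to its text.
    names_to_find: full, first, and last names to search for.
    """
    rng = random.Random(seed)  # pass a seed to make the sample reproducible
    chosen = rng.sample(sorted(documents), min(sample_size, len(documents)))
    report = {}
    for doc_id in chosen:
        text = documents[doc_id]
        # Case-insensitive, whole-word search: over-flagging is safer than missing a name.
        report[doc_id] = [
            name for name in names_to_find
            if re.search(r"\b" + re.escape(name) + r"\b", text, re.IGNORECASE)
        ]
    return report


if __name__ == "__main__":
    docs = {"Student_A7X9": "My sister Jane helped me edit.", "Student_K2P4": "We moved here last year."}
    print(spot_check(docs, ["Jane", "Doe"], sample_size=2, seed=1))
```

Any document with a non-empty list of names needs another pass before it goes to an AI tool.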

Train Students on Anonymization

Teaching students about data anonymization protects their privacy and prepares them for a world where data security is increasingly important. Hold sessions explaining why anonymization matters, especially in the context of AI in education. Show students how to remove personal details from their work, focusing on both obvious and subtle identifiers. This training not only helps with anonymizing schoolwork but also builds data privacy awareness they can carry into the future.

A Top Priority for Educators: Protect Student Privacy

As we continue to learn more about AI and its potential for education, protecting student privacy becomes increasingly important. The methods outlined above (assigning unique identifiers, removing personal details, using data scrubbing tools, reviewing submissions, and training students) provide a strong foundation for keeping sensitive information safe when using AI tools. By adopting these practices, educators can take advantage of AI while maintaining trust and confidentiality. The goal isn’t to avoid new technologies, but to use them responsibly and ethically. As AI becomes more prevalent in classrooms, we must prioritize student privacy, teach digital literacy, and ensure that AI integration is thoughtful and secure. In doing so, we create a safer, more equitable learning environment that equips students for a future where data awareness and privacy are critical.